Structuring Pipelines

Right now, our pipeline is a single-task job. Such a monolithic setup is harder to iterate on because a small change to the testing portion may have undesired effects on the build portion. When things do break, a modular setup is easier to debug. More structure in the pipeline will also enable features such as putting semver in our artifacts and repo tags.

Separating Jobs

First, let's break our solitary pipeline job down into separate tasks:

    ...
    jobs:
    - name: test-and-build
      plan:
      - get: code-repo
        trigger: true
      - task: unit-test
        config:
          image_resource:
            type: docker-image
            source:
              repository: golang
              tag: 1.19-bullseye
          inputs:
          - name: code-repo
          outputs:
          - name: build
          platform: linux
          run:
            path: /bin/sh
            args:
            - -c
            - |
              set -eux
              cd code-repo

              # Setup dependencies
              go mod tidy

              # Install the test framework
              go get github.com/onsi/ginkgo/v2/ginkgo
              go install github.com/onsi/ginkgo/v2/ginkgo

              # Test, looking for tests recursively
              ginkgo -r
      - task: build
        config:
          image_resource:
            type: docker-image
            source:
              repository: golang
              tag: 1.19-bullseye
          inputs:
          - name: code-repo
          outputs:
          - name: build
          platform: linux
          run:
            path: /bin/sh
            args:
            - -c
            - |
              set -eux
              cd code-repo

              # Setup dependencies
              go mod tidy

              # Build and place in the output directory.
              go build -o ../build/fetcher-linux-amd64 cmd/main.go
      - put: binary-linux
        params:
          file: build/fetcher-linux-amd64
    ...

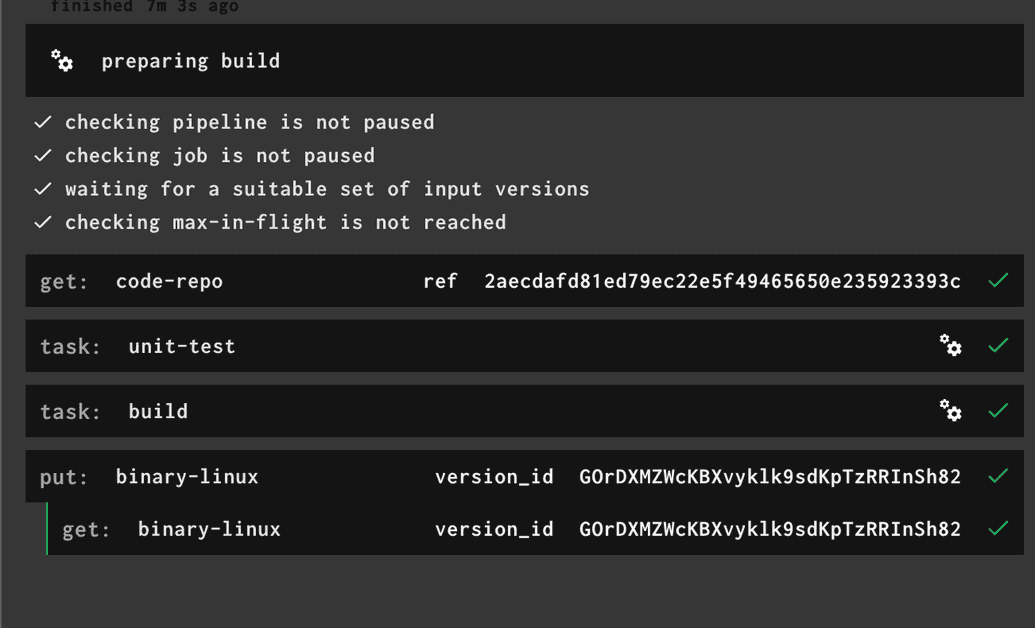

Go ahead and set-pipeline and re-run the job. The execution should now break down into 4 separate steps, like this:

Let's take it a step further and divide the job into two separate jobs, test and build:

    [...]
    jobs:
    - name: test
      plan:
      - get: code-repo
        trigger: true
      - task: unit-test
        config:
          image_resource:
            type: docker-image
            source:
              repository: golang
              tag: 1.19-bullseye
          inputs:
          - name: code-repo
          outputs:
          - name: build
          platform: linux
          run:
            path: /bin/sh
            args:
            - -c
            - |
              set -eux
              cd code-repo

              # Setup dependencies
              go mod tidy

              # Install the test framework
              go get github.com/onsi/ginkgo/v2/ginkgo
              go install github.com/onsi/ginkgo/v2/ginkgo

              # Test, looking for tests recursively
              ginkgo -r
    - name: build
      plan:
      - get: code-repo
        trigger: true
        passed: [ test ]
      - task: build
        config:
          image_resource:
            type: docker-image
            source:
              repository: golang
              tag: 1.19-bullseye
          inputs:
          - name: code-repo
          outputs:
          - name: build
          platform: linux
          run:
            path: /bin/sh
            args:
            - -c
            - |
              set -eux
              cd code-repo

              # Setup dependencies
              go mod tidy

              # Build and place in the output directory.
              go build -o ../build/fetcher-linux-amd64 cmd/main.go
      - put: binary-linux
        params:
          file: build/fetcher-linux-amd64

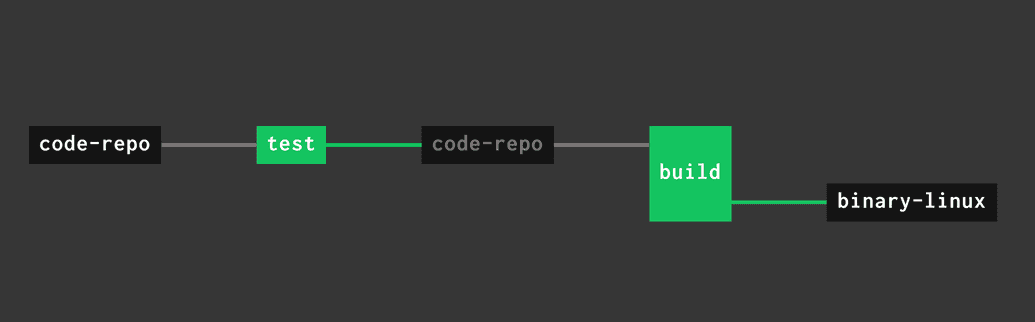

After the next set-pipeline the pipeline takes the following form:

Are you following along? Here is a bulleted list of the things that changed:

- The jobs list went from a single test-and-build entry to two job entries: test and build.

- We connected the sequence of jobs through the code-repo resource by declaring the passed argument in the build job, making it dependent on successful execution of the test job.

- We attached the put step (upload of the binary to S3) to the build job, since the test job doesn't produce the artifact as an output.

So far we have doubled the size of our pipeline for no apparent gain. These changes will pay off as soon as we generalize the build task to produce binary artifacts for different architectures. But first, let's add semantic versioning.

Adding Semantic Versioning

SemVer is a popular versioning scheme that is widely adopted across software projects. To add semver to our project we need to do five things:

- Add a state resource keeping the version number. We'll use S3.

- Pass the resource into the build job.

- Use the resource in the build task.

- Update the binary-linux resource to store each version as a separate file.

- On success, update the state with the bumped version.

Step one: Add the following entry to the resources section of your pipeline:

    - name: version
      type: semver
      source:
        driver: s3
        initial_version: 0.0.0
        bucket: concourse-farm-releases
        key: version
        access_key_id: ((AWS_ACCESS_KEY_ID))
        secret_access_key: ((AWS_SECRET_ACCESS_KEY))
        region_name: ((AWS_DEFAULT_REGION))

Step two: Add a get step for the version resource to the build job:

      [...]
      jobs:
      - name: build
        plan:
        - get: code-repo
    +   - get: version
    +     params:
    +       bump: patch
      [...]
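To make the effect of bump: patch concrete, here is a minimal shell sketch of a patch bump on a MAJOR.MINOR.PATCH string. It only illustrates the arithmetic; the semver resource does the real work, and the function name bump_patch is made up for this example.

```shell
#!/bin/sh
# Hypothetical helper: bump the PATCH component of a MAJOR.MINOR.PATCH version.
bump_patch() {
  major=${1%%.*}      # text before the first dot
  rest=${1#*.}        # text after the first dot
  minor=${rest%%.*}   # text between the first and second dot
  patch=${rest#*.}    # text after the second dot
  echo "${major}.${minor}.$((patch + 1))"
}

bump_patch 0.0.0   # prints 0.0.1
bump_patch 1.4.9   # prints 1.4.10
```

Starting from the initial_version of 0.0.0, the first build therefore sees 0.0.1 in version/version.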

Step three: Weave the version into the binary name in the build task:

      [...]
        - task: build
          config:
            image_resource:
              type: docker-image
              source:
                repository: golang
                tag: 1.19-bullseye
            inputs:
    +       - name: version
            - name: code-repo
            outputs:
            - name: build
            platform: linux
            run:
              path: /bin/sh
              args:
              - -c
              - |
                set -eux
    +           version="$(cat version/version)"
                cd code-repo

                # Setup dependencies
                go mod tidy

                # Build and place in the output directory.
    +           go build -o "../build/fetcher-${version}-linux-amd64" cmd/main.go
    -           go build -o ../build/fetcher-linux-amd64 cmd/main.go
      [...]

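Step four: Correct the binary-linux resource to support multiple files, as shown in the diff below. Because the binary name now contains the version, the file param of the put: binary-linux step also has to become a glob (build/fetcher-*, as it appears in the later listings).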

      - name: binary-linux
        type: s3
        source:
          access_key_id: ((AWS_ACCESS_KEY_ID))
          secret_access_key: ((AWS_SECRET_ACCESS_KEY))
          region_name: ((AWS_DEFAULT_REGION))
          bucket: concourse-farm-releases
    -     versioned_file: binaries/fetcher-linux-amd64
    +     regexp: binaries/linux/fetcher-(.*)-linux-amd64
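The capture group in the regexp is the part of the object key that carries the version. As a rough illustration, the sketch below emulates that extraction with sed. The helper name extract_version is hypothetical, and anchoring the pattern with ^ and $ is an assumption of this sketch, not a statement about how the s3 resource matches keys.

```shell
#!/bin/sh
# Hypothetical helper: emulate how the capture group isolates the version
# part of an object key. Prints nothing when the key does not match.
extract_version() {
  printf '%s\n' "$1" |
    sed -n -E 's|^binaries/linux/fetcher-(.*)-linux-amd64$|\1|p'
}

extract_version "binaries/linux/fetcher-0.0.1-linux-amd64"   # prints 0.0.1
extract_version "binaries/linux/fetcher-linux-amd64"         # prints nothing
```

The second call shows why the old unversioned file name no longer fits the resource: without a version segment there is nothing for the group to capture.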

Step five: Save the updated version to the version file in S3.

Add the put step at the end of the build job plan:

    +   - put: version
    +     params: {file: version/version}

Go ahead and set-pipeline (don't forget to add -l creds.yml when setting the pipeline).
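For reference, the set-pipeline call can be wrapped in a small shell function. This is only a sketch: the target and pipeline names are passed in as arguments because this section does not name them, and the FLY override is a convenience invented here so the command can be previewed without a Concourse target.

```shell
#!/bin/sh
# Sketch: set the pipeline from pipeline.yml, loading credentials from creds.yml.
# $1 = fly target name, $2 = pipeline name (both are placeholders you supply).
# FLY can be overridden, for example FLY=echo, to print the arguments instead
# of running fly.
set_pipeline() {
  ${FLY:-fly} -t "$1" set-pipeline -p "$2" -c pipeline.yml -l creds.yml
}
```

Calling set_pipeline my-target my-pipeline (with your own names) runs the same command you have been typing by hand, with the -l creds.yml flag already in place.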

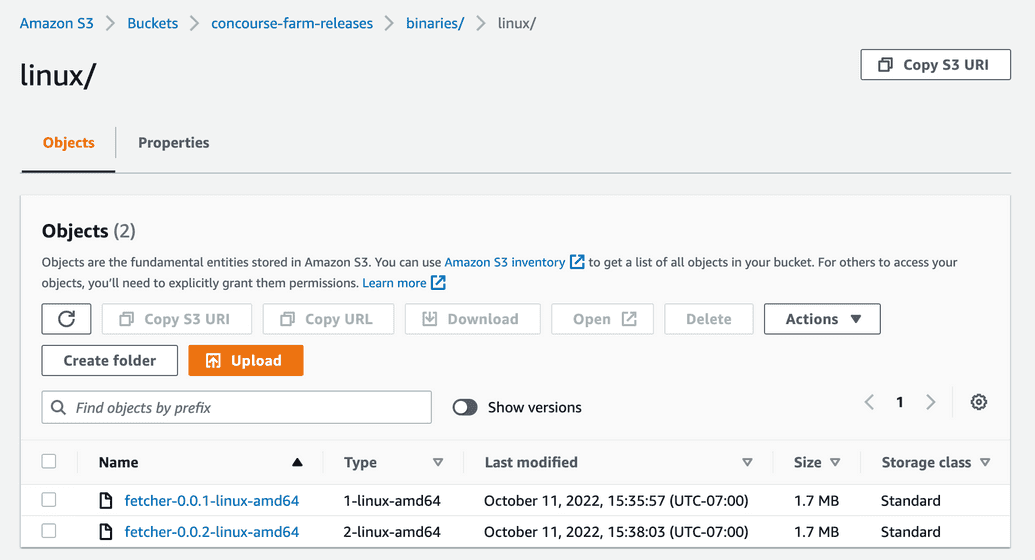

Now, re-run the build job a couple of times. You should find a versioned file in S3:

Generalizing Tasks

Our build task does a fine job of building Linux binaries for amd64. It would be great to build for Windows and macOS too. Let's generalize the task and reuse it for cross-compiling for different OSes and architectures. We'll use task params, a set of key-value pairs that are passed to the build container as environment variables.

      [...]
        - task: build
          config:
            image_resource:
              type: docker-image
              source:
                repository: golang
                tag: 1.19-bullseye
    +       params:
    +         GOARCH: amd64
    +         GOOS: linux
            inputs:
            - name: version
            - name: code-repo
            outputs:
            - name: build
            platform: linux
            run:
              path: /bin/sh
              args:
              - -c
              - |
                set -eux
                version="$(cat version/version)"
                cd code-repo

                # Setup dependencies
                go mod tidy

                # Build and place in the output directory.
    -           go build -o "../build/fetcher-${version}-linux-amd64" cmd/main.go
    +           go build -o "../build/fetcher-${version}-${GOOS}-${GOARCH}" cmd/main.go
      [...]

We have successfully generalized our task. The Go compiler picks up the GOOS and GOARCH variables from the environment and cross-compiles our binary for the right OS and architecture.
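The mechanism is ordinary environment inheritance, which can be tried outside Concourse. The sketch below uses a stand-in for the task script that only reports its target. The function name run_with_params is hypothetical, and echo takes the place of go build so the example runs without a Go toolchain.

```shell
#!/bin/sh
# Hypothetical stand-in for the build task: start a child shell with GOOS and
# GOARCH in its environment, the way task params reach the build container.
run_with_params() {
  env GOOS="$1" GOARCH="$2" sh -c 'echo "building for ${GOOS}/${GOARCH}"'
}

run_with_params darwin amd64   # prints: building for darwin/amd64
```

With Go installed, swapping the echo for the go build line from the task would cross-compile for the given pair in the same way.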

It's time to make use of this task. Up until now we have been in-lining task definitions into our pipeline. At this point we'll split the build task definition out into its own YAML file and reference it from the pipeline. We'll check that task into the git repo alongside our code, in the ci/tasks/ directory.

    # code-repo/ci/tasks/build.yml
    image_resource:
      type: docker-image
      source:
        repository: golang
        tag: 1.19-bullseye
    params:
      GOARCH: amd64
      GOOS: linux
      FILE_EXTENSION: ""
    inputs:
    - name: version
    - name: code-repo
    outputs:
    - name: build
    platform: linux
    run:
      path: /bin/sh
      args:
      - -c
      - |
        set -eux
        version="$(cat version/version)"
        cd code-repo

        # Setup dependencies
        go mod tidy

        # Build and place in the output directory.
        go build -o "../build/fetcher-${version}-${GOOS}-${GOARCH}${FILE_EXTENSION}" cmd/main.go

Make sure to check that new task file into the repo before continuing.

Now, in the pipeline, replace the config directive in the build task with a file directive referencing our file and supplying parameters:

      # pipeline.yml
      [...]
        - task: build
    -     config:
    -       image_resource:
    -         type: docker-image
    -         source:
    -           repository: golang
    -           tag: 1.19-bullseye
    -       inputs:
    -       - name: version
    -       - name: code-repo
    -       outputs:
    -       - name: build
    -       platform: linux
    -       run:
    -         path: /bin/sh
    -         args:
    -         - -c
    -         - |
    -           set -eux
    -           version="$(cat version/version)"
    -           cd code-repo
    -
    -           # Setup dependencies
    -           go mod tidy
    -
    -           # Build and place in the output directory.
    -           go build -o "../build/fetcher-${version}-linux-amd64" cmd/main.go
    -
    +     file: code-repo/ci/tasks/build.yml
    +     params:
    +       GOARCH: amd64
    +       GOOS: linux
        - put: binary-linux
          params:
            file: build/fetcher-*

Re-set the pipeline and run a test build. Did it work?

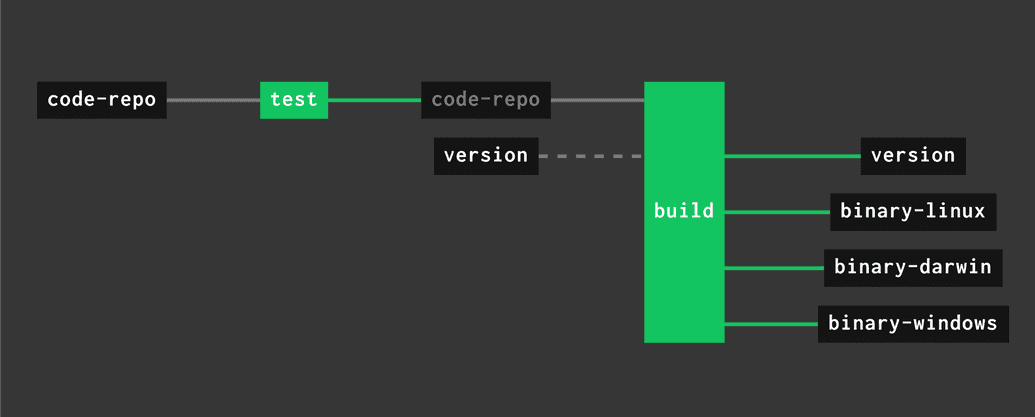

Sure did. We can now reuse it for cross-compilation purposes:

- add artifact resources for Windows and macOS

        [...]
        - name: binary-linux
          type: s3
          source:
            access_key_id: ((AWS_ACCESS_KEY_ID))
            secret_access_key: ((AWS_SECRET_ACCESS_KEY))
            region_name: ((AWS_DEFAULT_REGION))
            bucket: concourse-farm-releases
            regexp: binaries/linux/fetcher-(.*)-linux-amd64
      +
      + - name: binary-darwin
      +   type: s3
      +   source:
      +     access_key_id: ((AWS_ACCESS_KEY_ID))
      +     secret_access_key: ((AWS_SECRET_ACCESS_KEY))
      +     region_name: ((AWS_DEFAULT_REGION))
      +     bucket: concourse-farm-releases
      +     regexp: binaries/darwin/fetcher-(.*)-darwin-amd64
      +
      + - name: binary-windows
      +   type: s3
      +   source:
      +     access_key_id: ((AWS_ACCESS_KEY_ID))
      +     secret_access_key: ((AWS_SECRET_ACCESS_KEY))
      +     region_name: ((AWS_DEFAULT_REGION))
      +     bucket: concourse-farm-releases
      +     regexp: binaries/windows/fetcher-(.*)-windows-amd64

- add a build task for each new OS, each followed by its respective put step

        [...]
        - name: build
          plan:
          - get: code-repo
            trigger: true
            passed: [ test ]
          - get: version
            params:
              bump: patch
      -   - task: build
      +   - task: build-linux-amd64
            file: code-repo/ci/tasks/build.yml
            params:
              GOARCH: amd64
              GOOS: linux
          - put: binary-linux
            params:
              file: build/fetcher-*
      +   - task: build-darwin-amd64
      +     file: code-repo/ci/tasks/build.yml
      +     params:
      +       GOARCH: amd64
      +       GOOS: darwin
      +   - put: binary-darwin
      +     params:
      +       file: build/fetcher-*
      +   - task: build-windows-amd64
      +     file: code-repo/ci/tasks/build.yml
      +     params:
      +       GOARCH: amd64
      +       GOOS: windows
      +       FILE_EXTENSION: ".exe"
      +   - put: binary-windows
      +     params:
      +       file: build/fetcher-*

Note the optional FILE_EXTENSION param in the Windows build.
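To see which file names the three builds should produce, the naming pattern from the go build line can be replayed in plain shell. This is a sketch: artifact_name is a hypothetical helper, 0.0.1 is an assumed example version, and shell variables stand in for the task's environment.

```shell
#!/bin/sh
# Hypothetical helper mirroring the output name used in build.yml:
# fetcher-${version}-${GOOS}-${GOARCH}${FILE_EXTENSION}
artifact_name() {
  echo "fetcher-${1}-${GOOS}-${GOARCH}${FILE_EXTENSION:-}"
}

version=0.0.1   # assumed example version
GOARCH=amd64
for GOOS in linux darwin windows; do
  FILE_EXTENSION=""
  # Only the Windows build sets the optional extension.
  if [ "$GOOS" = windows ]; then
    FILE_EXTENSION=".exe"
  fi
  artifact_name "$version"
done
```

Each printed name should fit the regexp of the matching binary-* resource, except that the Windows name ends in .exe while the binary-windows regexp ends in windows-amd64, so it is worth checking how that upload behaves.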

As always, set-pipeline before proceeding, then re-run the pipeline to get semver-ed binaries for 3 different OSes!